In January 2026, several interviews with CEOs of top AI labs were released. One particularly fascinating encounter was the face-to-face interview (1) between Anthropic CEO Dario Amodei and Google DeepMind CEO Demis Hassabis. I have summarized my thoughts on what their comments imply. I hope you find this insightful!

1. Will AGI Arrive Within 2 Years?

Dario seems to hold a more accelerated timeline for the realization of AGI. While prefacing his thoughts with "It is difficult to predict exactly when it will happen," he pointed to the reality within his own company: "There are already engineers at Anthropic who say they no longer write code themselves. In the next 6 to 12 months, AI might handle the majority of code development. I feel that loop is closing rapidly." He argued that AI development has entered a flywheel effect, noting in particular that progress in coding and research is so remarkable that AI intelligence will surpass public expectations within a few short years.

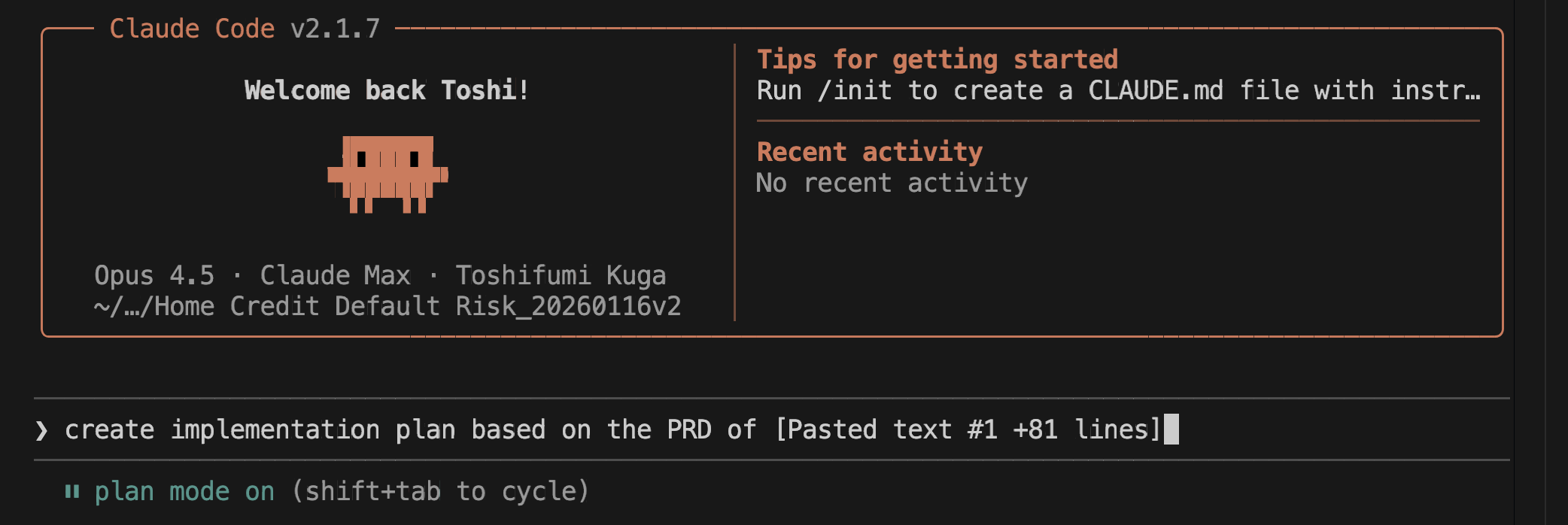

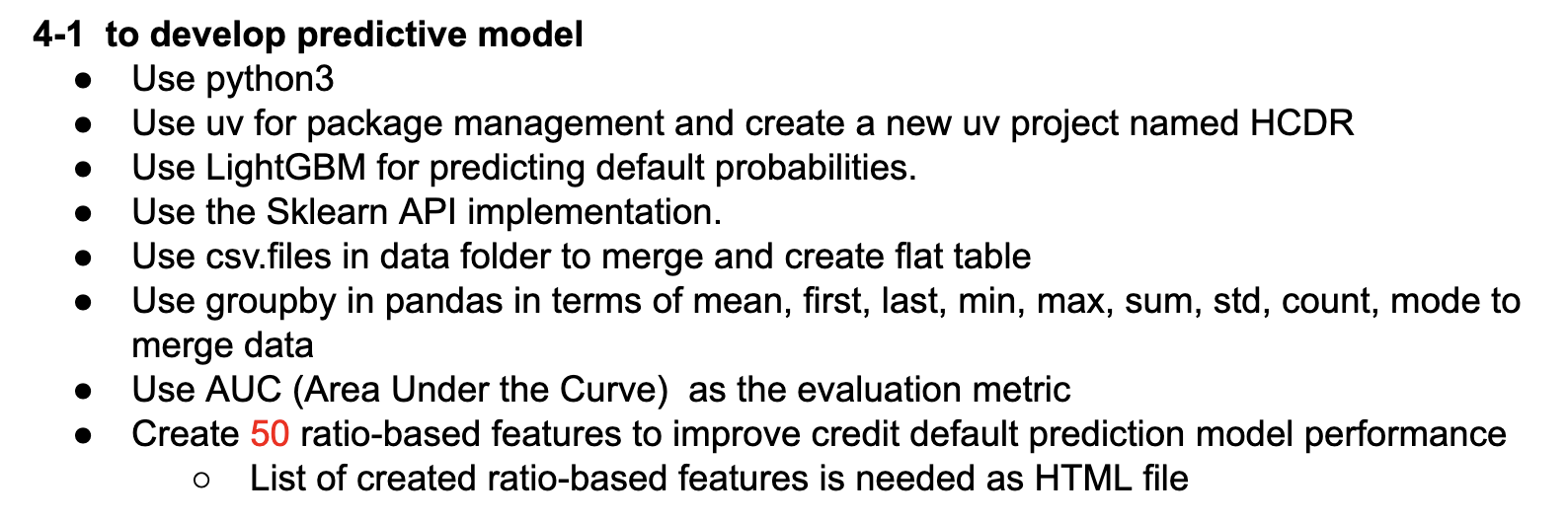

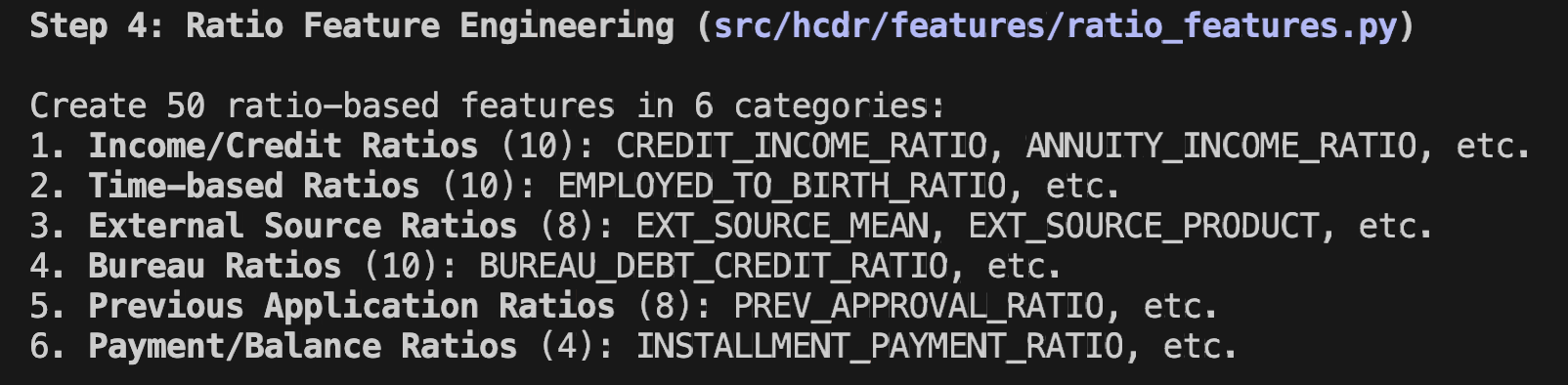

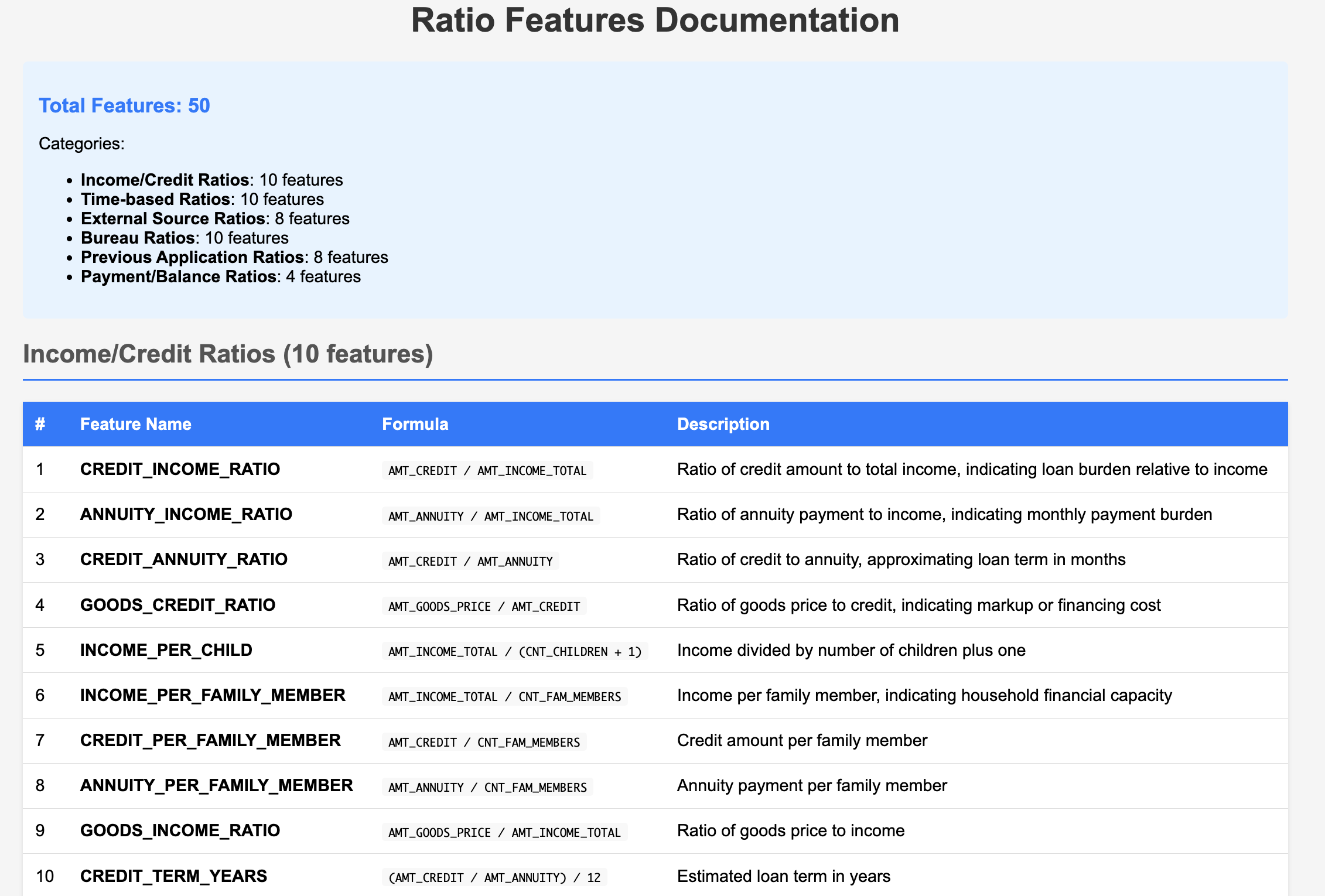

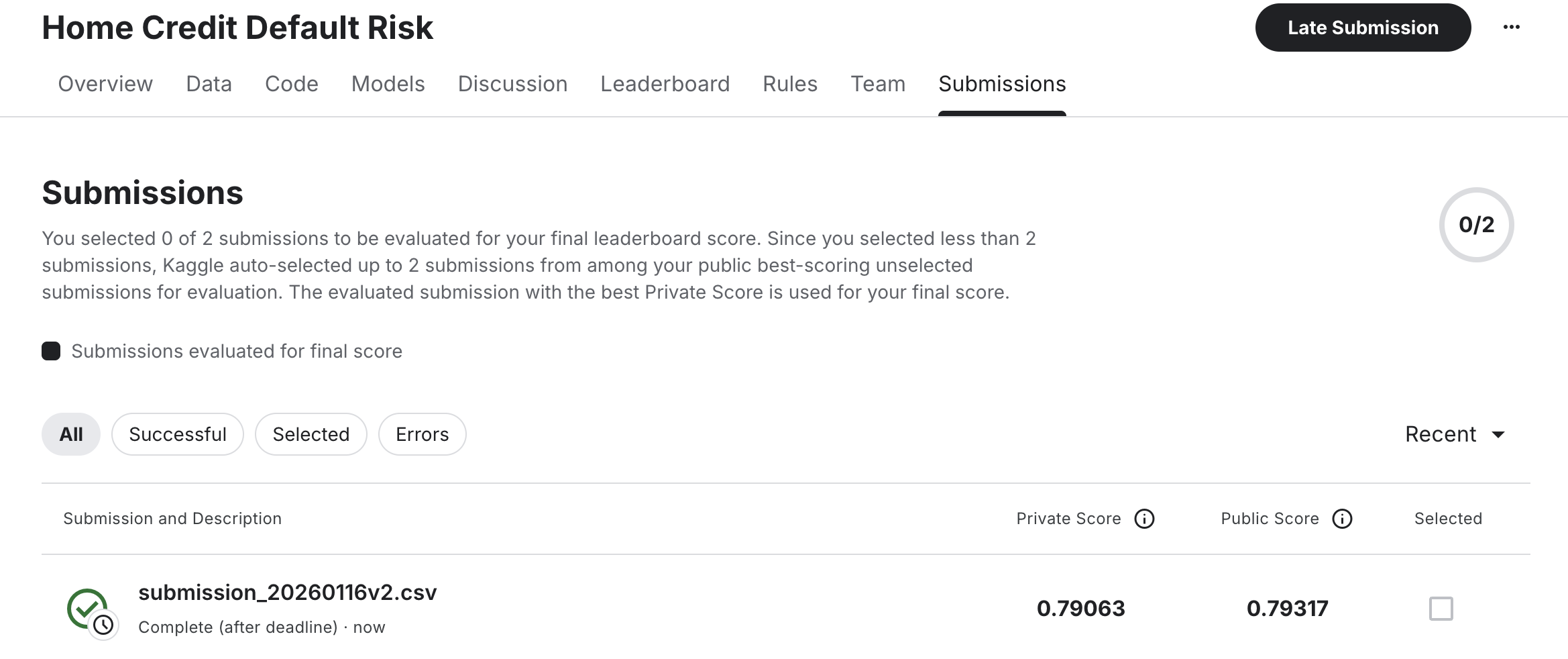

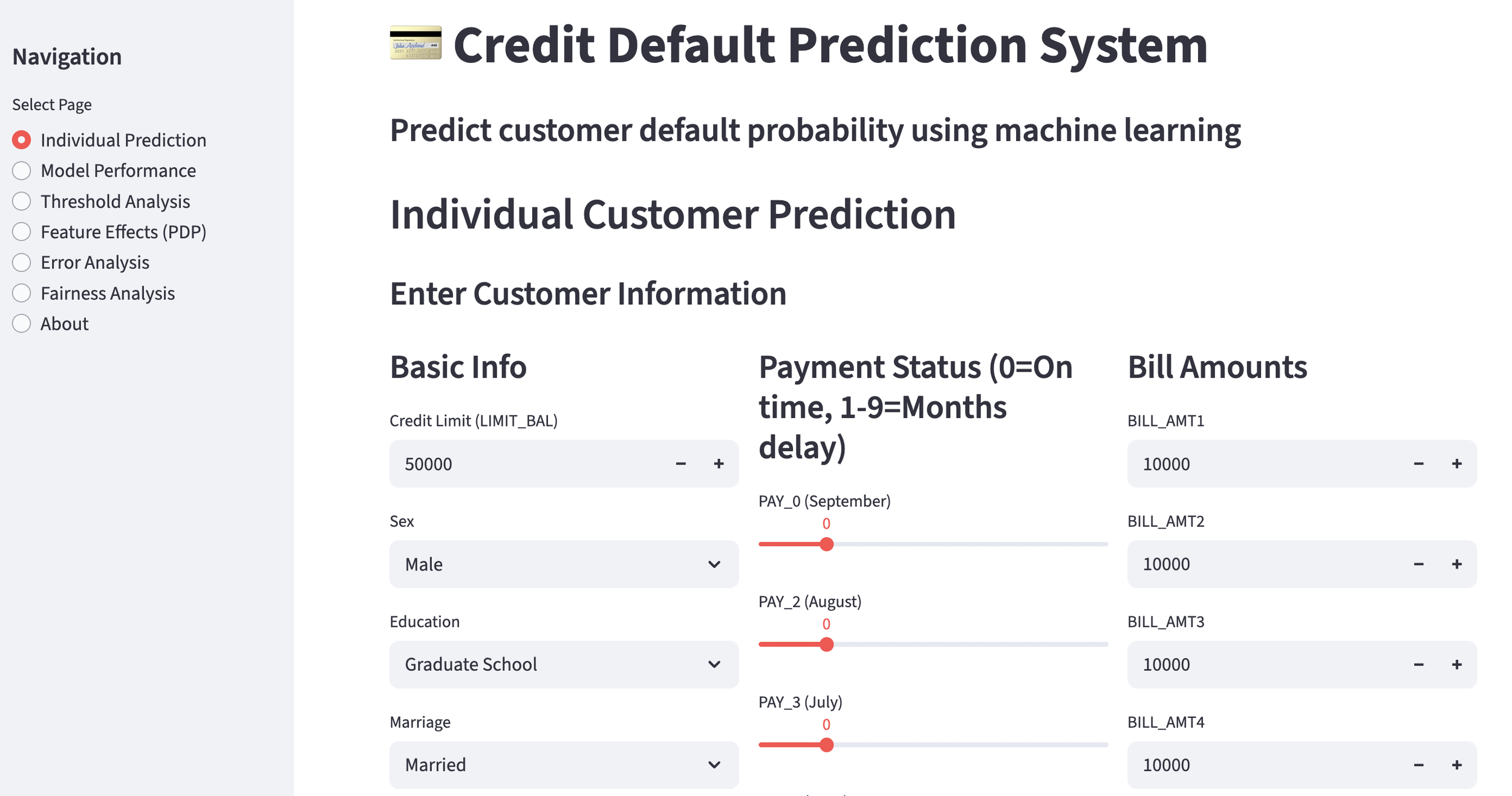

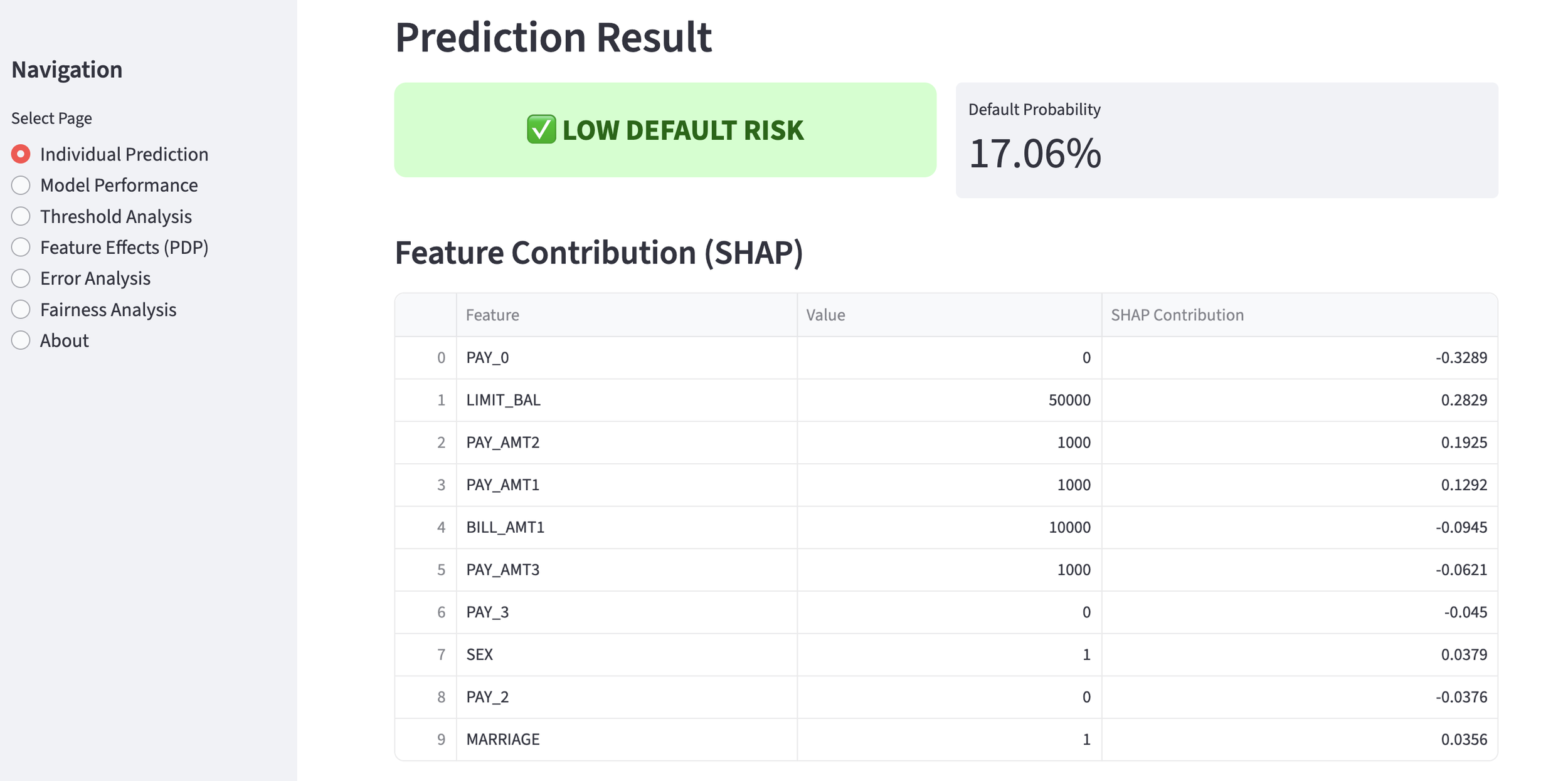

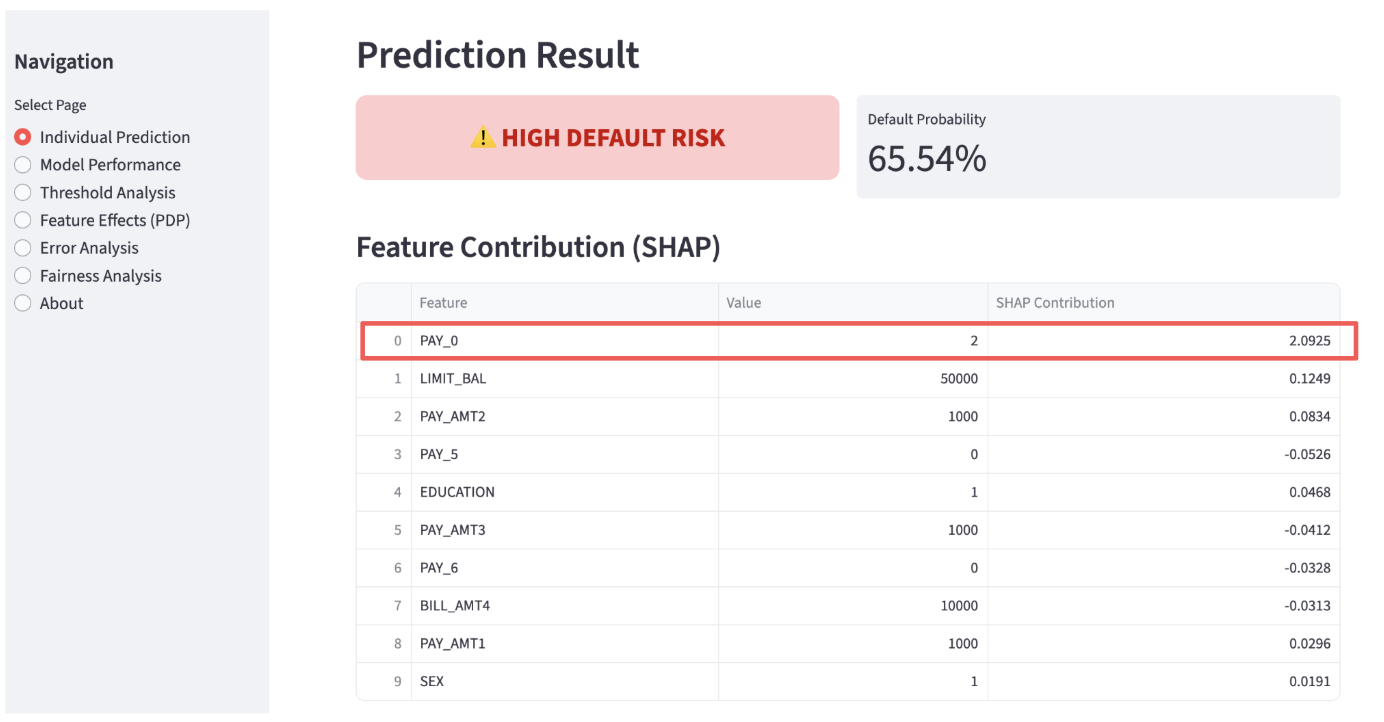

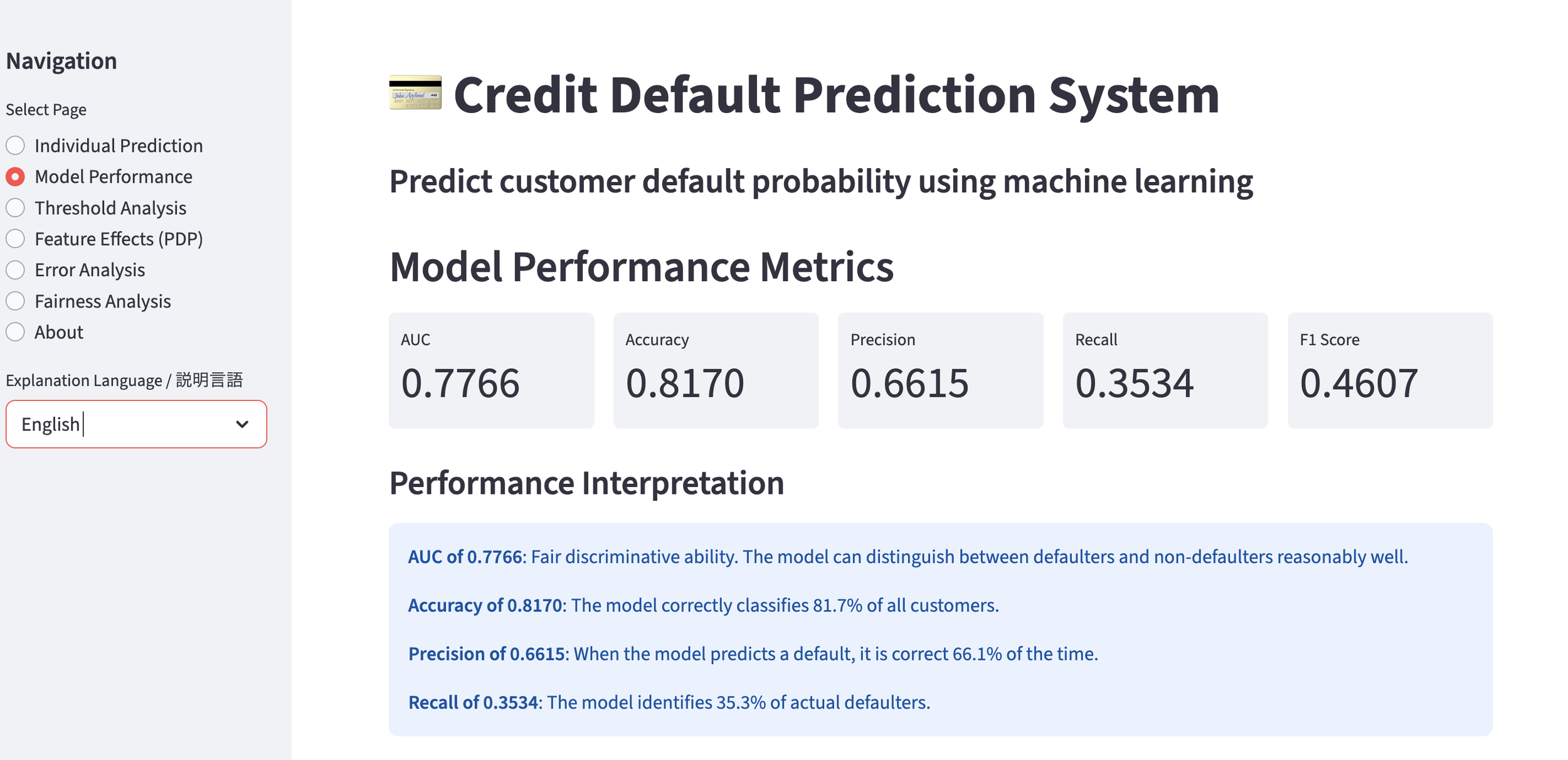

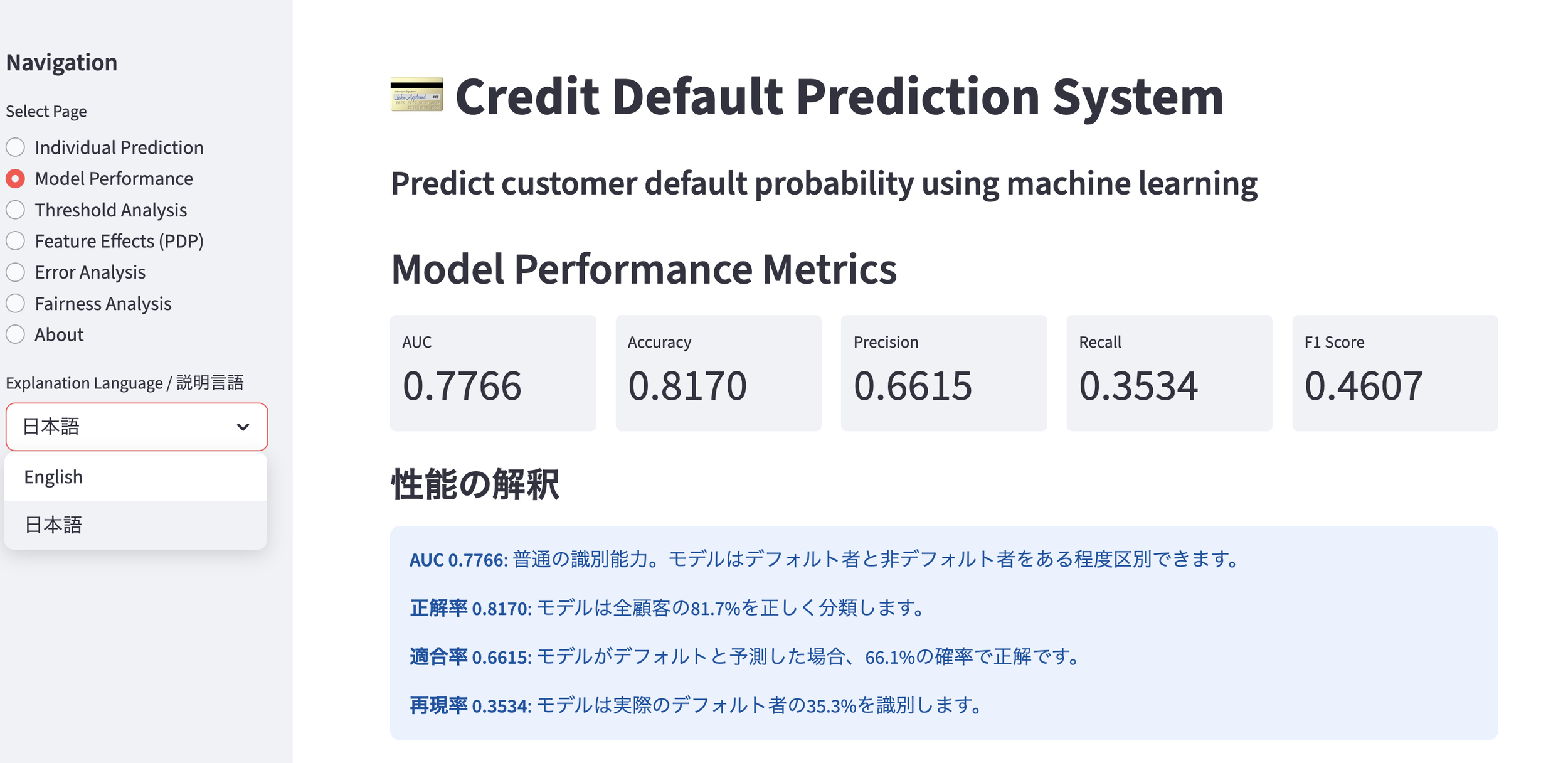

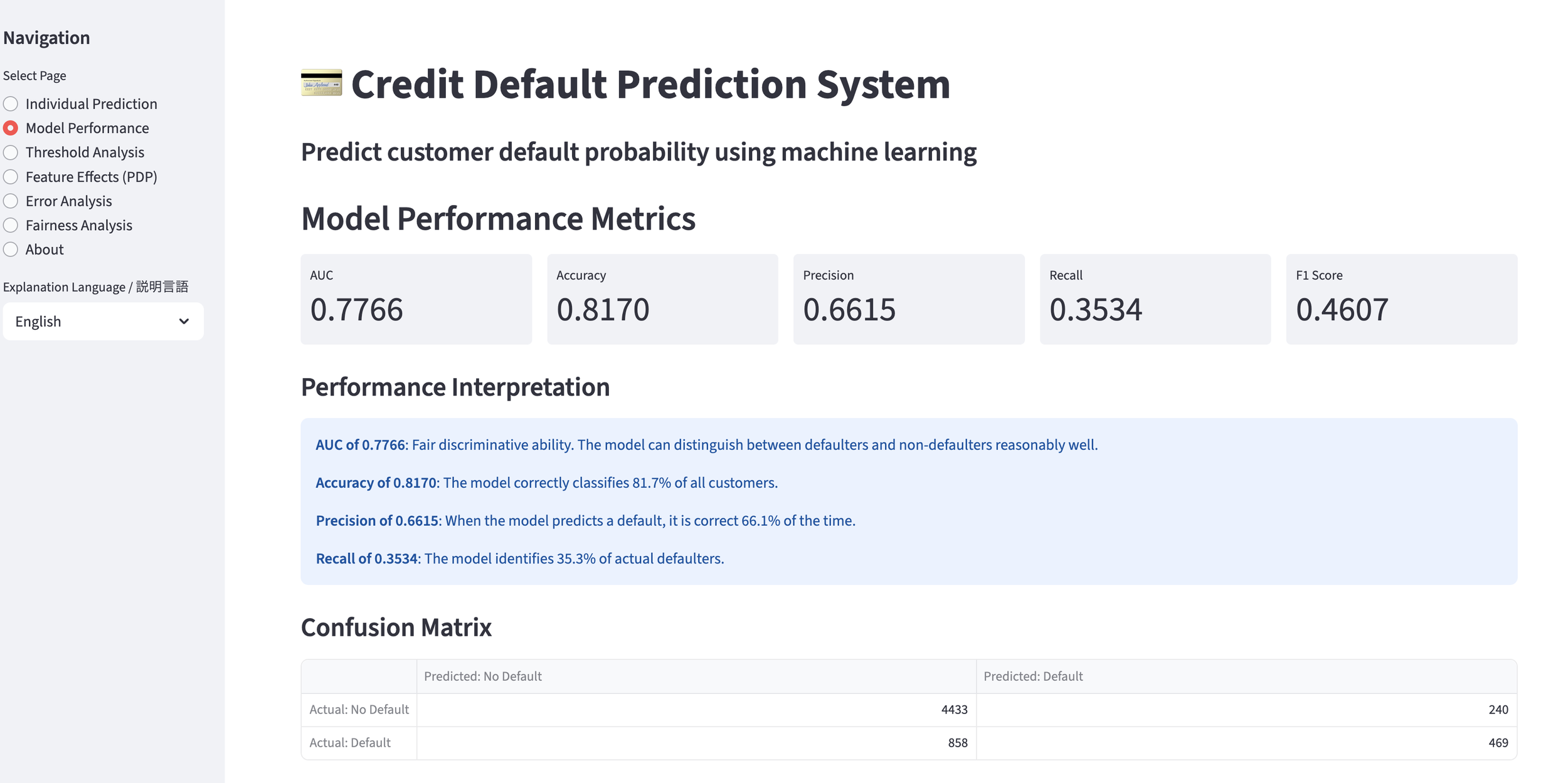

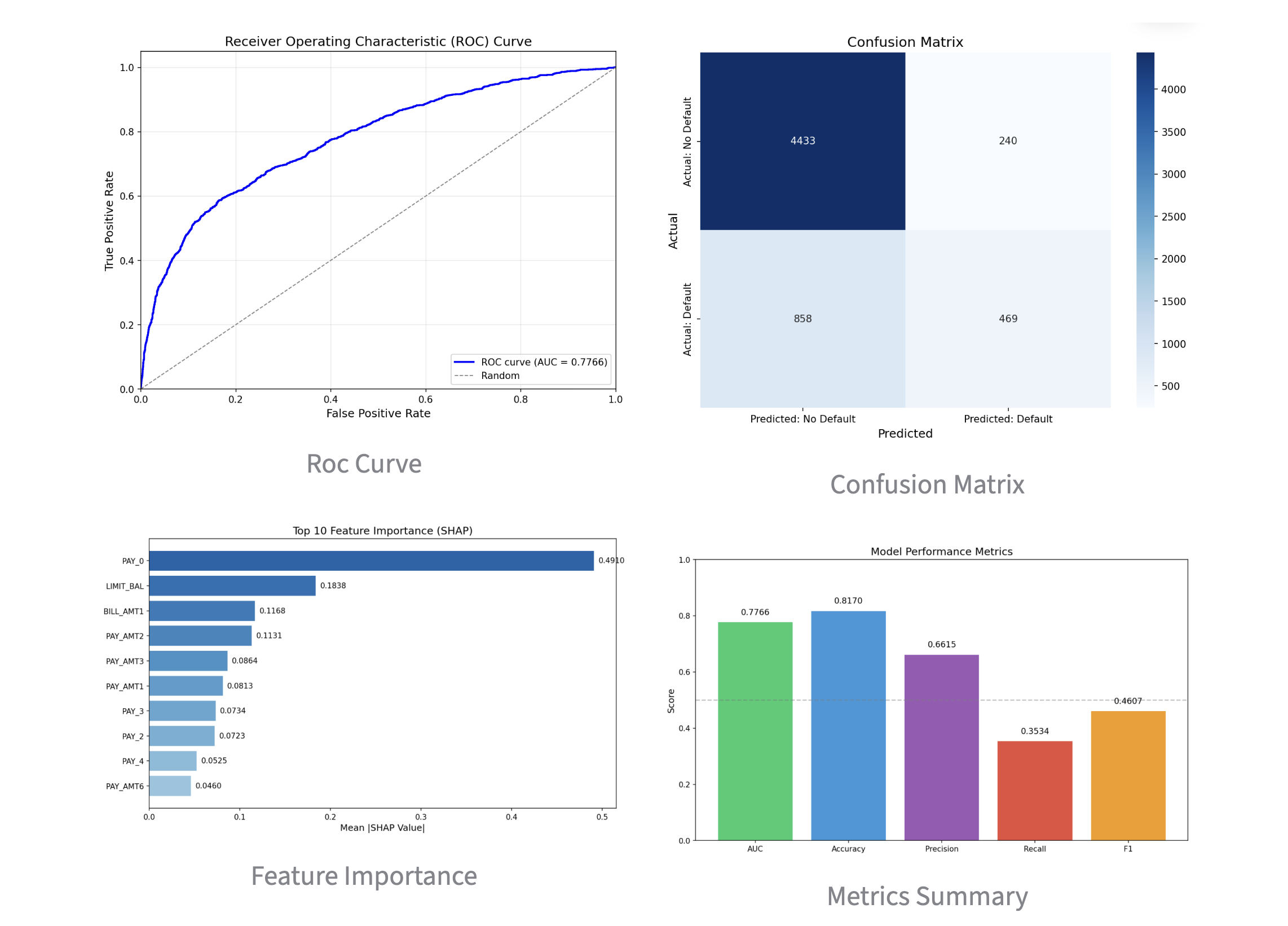

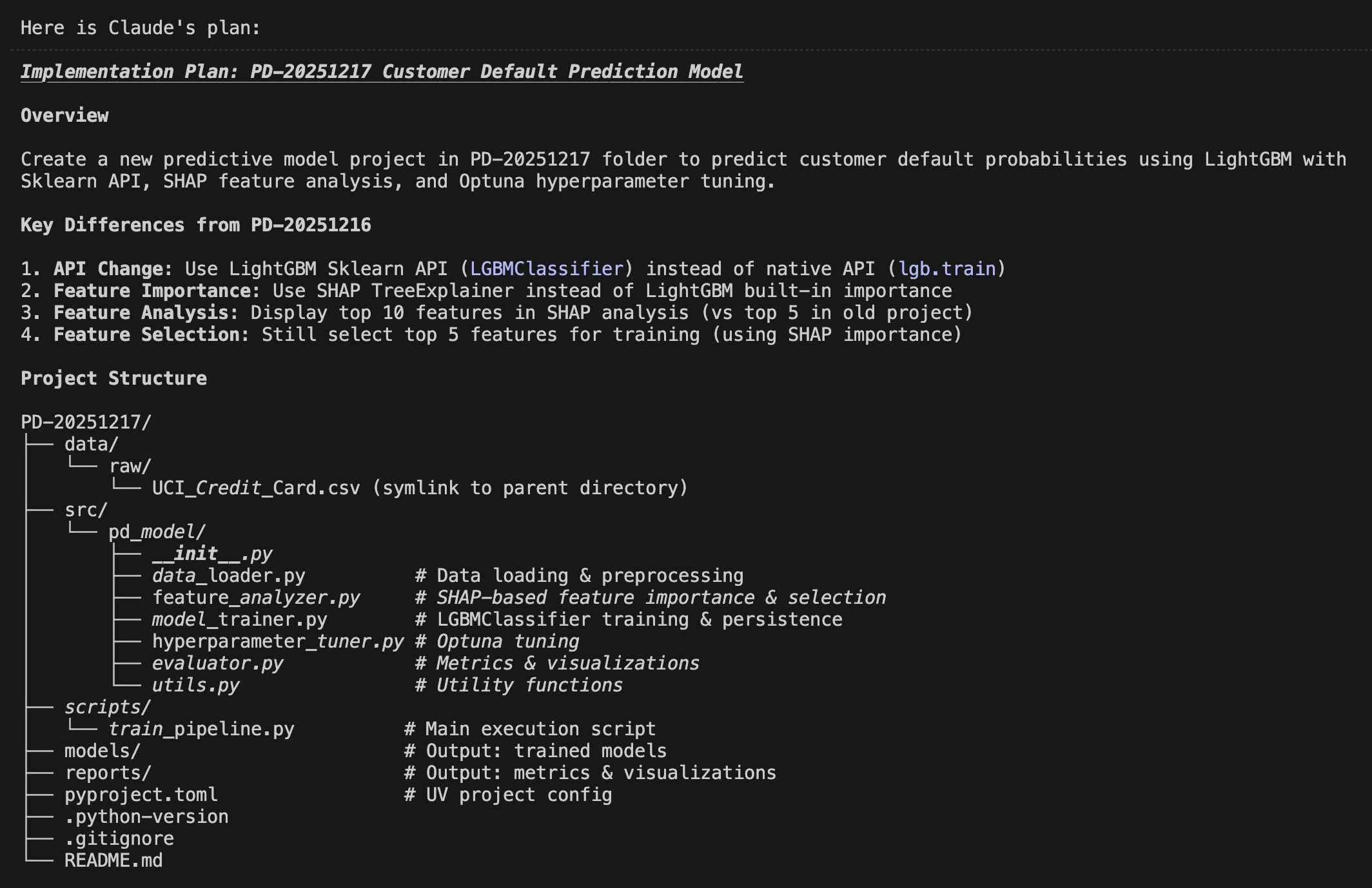

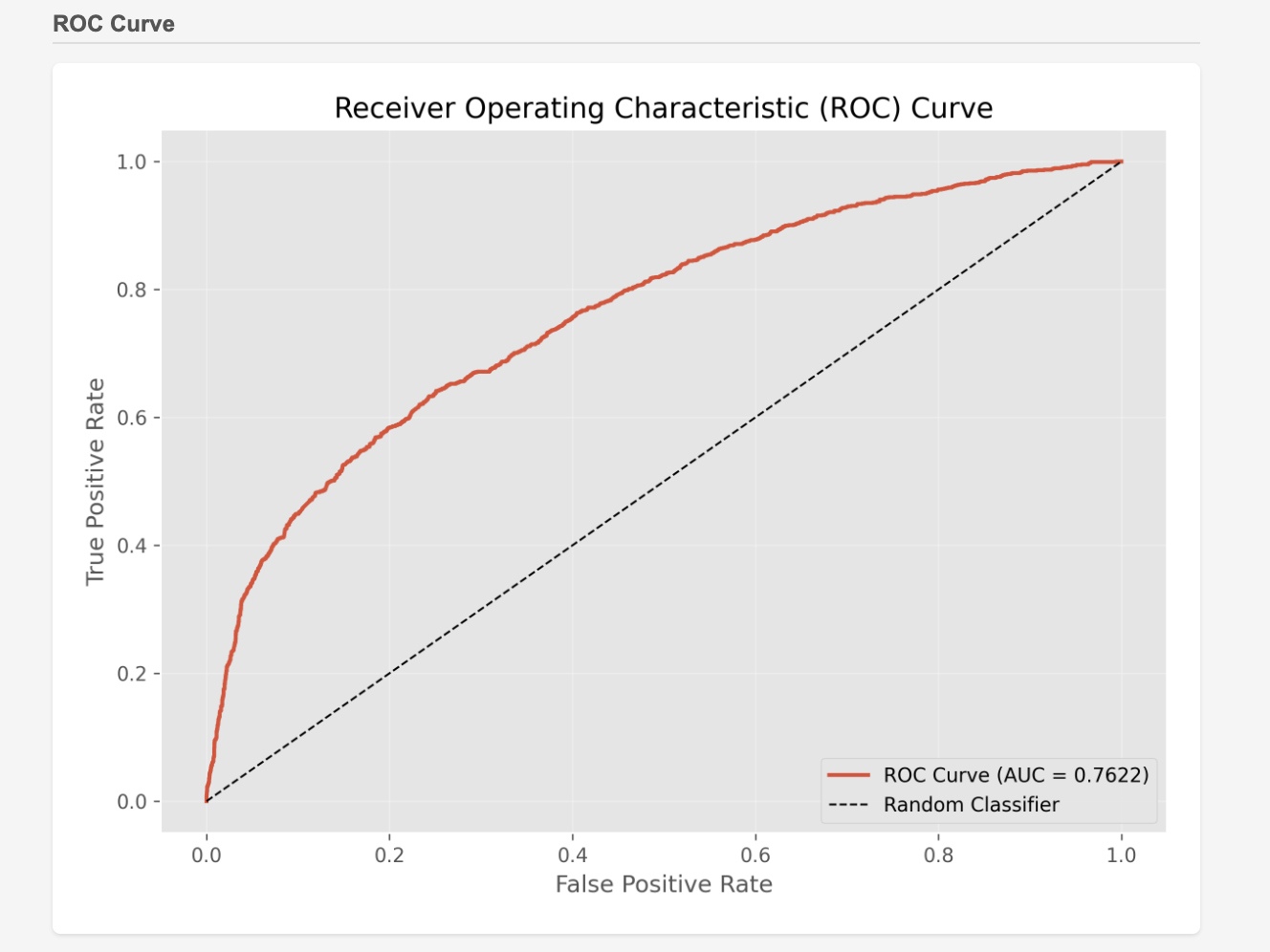

A prime example is Claude Code, released by Anthropic last year. This revolutionary product is currently taking the software development world by storm. It is no exaggeration to say that the common refrain "I don’t code manually anymore" is a direct result of this tool. In fact, I recently used it to tackle a past Kaggle competition; I achieved an AUC of 0.79 with zero manual coding, which absolutely stunned me (3).

2. AGI Is Still 5 Years Away

On the other hand, Demis maintains his characteristically cautious stance. He often remarks that there is a "50% chance of achieving AGI in five years." His reasoning is grounded in the current limitations of AI: "Today’s AI isn't yet consistently superior to humans across all fields. A model might show incredible performance in one area but make elementary mistakes in another. This inconsistency means we haven't reached AGI yet." He believes two or three more major breakthroughs are required, which explains his longer timeline compared to Dario.

Unlike Anthropic, which concentrates heavily on coding and language, Google is focusing on a broader spectrum. One such focus is world models: simulations of the physical spaces we inhabit. In these models, physical laws such as gravity are reproduced, allowing the AI to better understand the "real" world. Genie 3 (2) is their latest model in this category. While it has only been released in the US so far, I am eagerly anticipating its global rollout. The "breakthroughs" Demis mentions likely lie at the end of this developmental path.

3. Are We Prepared for AGI?

While their timelines differ, Dario and Demis agree on one fundamental point: AGI, which will surpass human capabilities in every field, is not far off. Almost exactly ten years ago, in March 2016, DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s top Go professionals. Since then, human players have been unable to compete on level terms with the strongest Go AI. Soon, we may reach a point where humans can no longer outperform AI in any field. What we are seeing in the world of coding today is the precursor to that shift.

It is a world that is difficult to visualize. Industrial structures will be upended, and the very role of "human work" will change. It is hard to say that we are currently prepared for this reality. In 2026, we must begin a serious global dialogue on how to adapt. I look forward to engaging in these discussions with people around the world.

I highly recommend watching the full interview with Dario and Demis. These two individuals hold the keys to our collective future. That’s all for today. Stay tuned!

1) The Day After AGI | World Economic Forum Annual Meeting 2026, World Economic Forum, Jan 21, 2026

2) Genie 3, Google DeepMind, Jan 29, 2026

3) Is agentic coding viable for Kaggle competitions?, Jan 16, 2026


Copyright © 2026 Toshifumi Kuga. All rights reserved.

Notice: ToshiStats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. ToshiStats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on ToshiStats Co., Ltd. and me to correct any errors or defects in the codes and the software.