Recently, OpenAI CEO Sam Altman commented on YouTube(1) that 'it is plausible that a legitimate AI Researcher will be achieved by March 2028.' Can this really be achieved in less than 2.5 years? I would like to examine this closely, comparing it with the statements of Demis Hassabis, CEO of rival company Google DeepMind.

1. Achieving a Legitimate AI Researcher by March 2028

Opinions are divided, even among experts, on when Artificial General Intelligence (AGI), which would surpass human intelligence, will actually be achieved. Amidst this, OpenAI CEO Sam Altman commented, referencing the following timeline, that 'it is plausible that a legitimate AI Researcher will be achieved by March 2028.'

Of course, this is an internal goal, and he isn't claiming it's AGI. However, if AI can take on the role of a researcher, technological development will accelerate dramatically, and the current industrial structure will likely change completely. I think it's groundbreaking that they have set a timeline for such a high-impact goal. The issue is its feasibility. Technical points were discussed in the YouTube video, but I felt they were not enough on their own to show that the goal is feasible. Much is likely confidential and cannot be disclosed, but a more in-depth explanation would have been welcome.

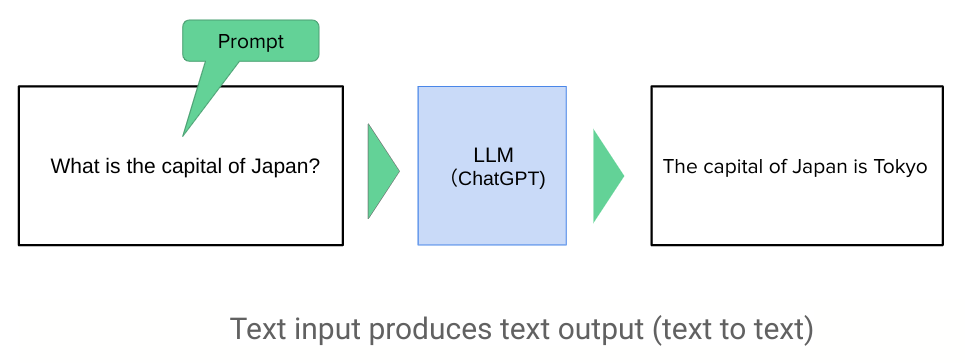

2. Current AI Lacks Consistency

At this point, let's introduce the opinion(2) of Google DeepMind CEO Demis Hassabis regarding the realization of AGI. As you know, he is a co-founder of DeepMind and has aimed to develop AGI since its founding in 2010. Despite that extensive experience, he says it will still take 5 to 10 years to achieve AGI. One reason for this is that 'current generative AI exhibits PhD-level capabilities for some tasks, yet at other times, it can make mistakes on simple high school math.' In short, its abilities 'lack consistency.' Consistency is essential for achieving AGI, and he suggests that two or three more breakthroughs will be necessary to get there. I find this to be a rather cautious view. For other points of discussion, please watch the YouTube video(2).

3. AI is Steadily Evolving, Step by Step

Although there are differences in their definitions of AGI and their timelines, both parties seem to agree on its eventual realization. We cannot predict when breakthroughs will occur. I believe the only thing we should do is 'prepare for the emergence of AGI.' Whether it arrives in 2028 or 10 years from now, we need to start working out now how we can use AGI, considered humanity's greatest invention, to realize a better society, industry, and life. Even as we speak, AI is likely evolving beneath the surface. Our company, ToshiStats, intends to continue these discussions so that we can successfully incorporate those advancements.

You can enjoy our video news ToshiStats-AI from this link, too!

1) Sam, Jakub, and Wojciech on the future of OpenAI with audience Q&A, OpenAI, 30 Oct 2025

2) Google DeepMind CEO Demis Hassabis on AI, Creativity, and a Golden Age of Science | All-In Summit, 13 Sep 2025

Copyright © 2025 Toshifumi Kuga. All rights reserved

Notice: ToshiStats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. ToshiStats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on ToshiStats Co., Ltd. and me to correct any errors or defects in the codes and the software.